New Punctuation Restoration and Truecasing models, PCM Mu-law support

We’ve released new Punctuation Restoration and Truecasing models that significantly improve the handling of acronyms, mixed-case words, and more.

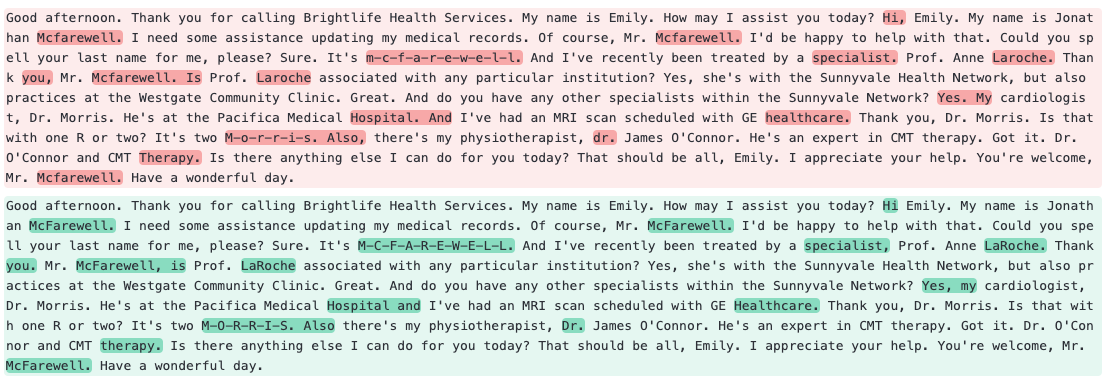

Below is a visual comparison between our previous Punctuation Restoration and Truecasing models (red) and the new models (green):

Going forward, the new Punctuation Restoration and Truecasing models are used automatically for both async and real-time transcriptions; no upgrade or special access is required. Both models are enabled by default. To enable or disable them in a request, use the `punctuate` parameter for Punctuation Restoration and the `format_text` parameter for Truecasing.
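As a rough illustration of how the two flags might be set, here is a minimal sketch that only builds a JSON request body and sends nothing over the network. The `punctuate` and `format_text` names come from this changelog; the `audio_url` field, the example URL, and the `build_transcript_request` helper are assumptions made for the example, so check the API reference for the exact request shape.

```python
import json


def build_transcript_request(audio_url, punctuate=True, format_text=True):
    """Build a JSON-serializable body for a transcription request.

    `punctuate` toggles Punctuation Restoration and `format_text` toggles
    Truecasing; both default to True, matching the documented defaults.
    The `audio_url` field name is an assumption for this sketch.
    """
    return {
        "audio_url": audio_url,
        "punctuate": punctuate,
        "format_text": format_text,
    }


# Keep punctuation but turn Truecasing off for this request.
body = build_transcript_request(
    "https://example.com/audio.mp3",  # hypothetical audio location
    punctuate=True,
    format_text=False,
)
print(json.dumps(body, indent=2))
```

Leaving both arguments at their defaults produces a body with both models enabled, which matches the behavior when the parameters are omitted.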

Read more about our new models here.

Our real-time transcription service now supports PCM Mu-law, an encoding used primarily in the telephony industry. To select it, set the `encoding` parameter in requests to our API. You can read more about our PCM Mu-law support here.
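The sketch below shows one way the `encoding` parameter could be attached to a real-time connection URL. Only the `encoding` parameter name is taken from this changelog. The `pcm_mulaw` value, the `sample_rate` parameter, passing both as query-string parameters, and the placeholder WebSocket URL are all assumptions for illustration; consult the real-time API documentation for the actual values. The 8000 Hz default reflects the sample rate commonly used for telephony audio.

```python
from urllib.parse import urlencode

# Placeholder endpoint, not the real service URL.
REALTIME_URL = "wss://realtime.example.com/ws"


def build_realtime_url(base_url, sample_rate=8000, encoding="pcm_mulaw"):
    """Append sample rate and encoding to a WebSocket URL as query parameters.

    The "pcm_mulaw" value and the `sample_rate` parameter name are assumed
    for this sketch; only `encoding` is named in the changelog.
    """
    query = urlencode({"sample_rate": sample_rate, "encoding": encoding})
    return f"{base_url}?{query}"


url = build_realtime_url(REALTIME_URL)
print(url)
```

Building the URL in a small helper keeps the encoding choice in one place, so switching a telephony stream back to another encoding is a single argument change.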

We have improved internal reporting for our transcription service, which will allow us to better monitor traffic.